Codemotion Milan 2025 - Day 2

Day 2 of Codemotion Milan was packed with interesting talks. Just like in part 1, I'll take you through each talk I attended and give you my highlights for each session. You can expect to learn what makes a product win big, how to write an insightful postmortem, what serverless pitfalls to avoid, how far web API capabilities have evolved, why our jobs are probably safe from AI, and more!

Let's get started.

How GitHub Won

GitHub cofounder and GitButler CEO Scott Chacon delivered the day's keynote, based on his blog post Why GitHub Actually Won, in which Scott explains the two reasons GitHub rose above its competitors:

- Product

- Timing

One is controllable, the other one isn't. It's about having good taste at the right time. You need to know what to build and who for, and get in the market when people need what you're doing.

These two reasons are closely linked because the time you start building your product influences what you build. For example, GitHub bet big on git over other version control systems, and that bet paid off.

Product

Product makes your thing stand out among competitors. But how do you make the right product?

Taste. Taste matters.

Scott referenced Steve Jobs's quote about Microsoft:

The only problem with Microsoft is they just have no taste. They have absolutely no taste [...] I don't mean that in a small way, I mean that in a big way. They don't think of original ideas, and they don't bring much culture into their product.

Scott defined taste as originating from first principles, which he defined as:

A basic concept or assumption we know to be true that is not deduced from anything else.

A great tool for uncovering first principles is to ask “why”. The inquisitive nature of a four-year-old can lead to profound truths. For that reason we should ask “why” about everything in our product.

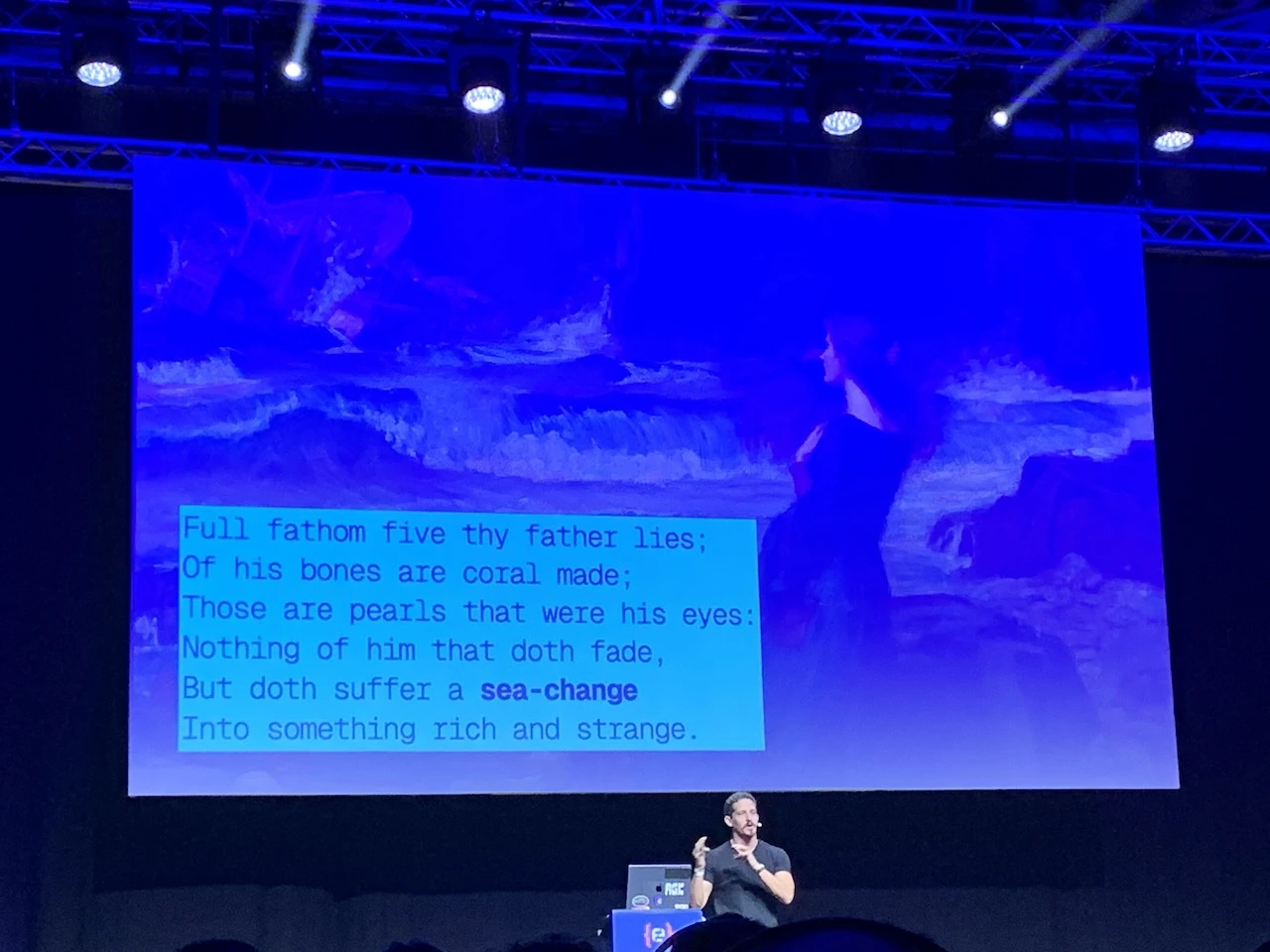

Timing

Timing is difficult to control. Scott mentioned the Apple Newton as an example of a product that hit the market too soon: the changes in the market and industry that it needed to succeed hadn't taken place yet. Scott referred to these changes as “sea-changes”.

It's interesting to see what companies come out of sea-changes. Amazon is a great example of a company that started at the right time, during the internet sea-change. The mobile computing sea-change gave birth to the iPhone, which enabled companies like Uber.

GitHub navigated its own sea-changes. In 2005 the first line of code was committed with git. In 2008, using centralized version control systems was the norm. That worked fine until the rise of open source. In 2008 there were only 18,000 open source projects in the entire world; now there are over 280 million on GitHub alone.

Scott demonstrated just how painstaking it was to contribute to an open source project using a centralized version control system like Subversion.

To make a change to an open source project you'd need to:

- Download the project

- Copy the project

- Make the change you want in the copy

- Create a diff between the copy and the download and save it as a patch file

- Send the patch file to the maintainers

- The maintainers would then download the patch file and apply the approved change
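
To get a feel for the "create a diff and save it as a patch file" step, here's a minimal sketch using Python's standard `difflib` module. The file name and contents are made up for illustration, and real projects would typically have used the `diff` and `patch` command-line tools instead.

```python
import difflib


def make_patch(original: str, modified: str, path: str = "README.txt") -> str:
    """Return a unified diff between the downloaded file and the edited copy."""
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        modified.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)


# Hypothetical file contents: the download and the copy with our fix.
downloaded = "Hello wrold\nSecond line\n"
edited_copy = "Hello world\nSecond line\n"

patch = make_patch(downloaded, edited_copy)
print(patch)  # this text is what you would send to the maintainers
```

The resulting text is the whole contribution: the maintainers have to receive it, review it, and apply it by hand on their side.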

Even though distributed version control was coming out on top in the beginning of open source, git wasn't the only option. Other systems like Mercurial were real players in the game. So why did git win in particular?

Scott said we can't be sure:

- Maybe it was Linux (created by Linus Torvalds), spreading its popularity to git (also created by Torvalds)

- Maybe it was GitHub

Either way, it's unclear where we're heading in today's AI sea-change, but this uncertainty gives us the opportunity to beat the tide by making something now.

Beyond Blame: The Art of the Postmortem

What do wildfires in California have in common with postmortems?

The Incident Command System (ICS) is a standardized approach to the command, control, and coordination of emergency response providing a common hierarchy within which responders from multiple agencies can be effective.

Riccardo Carlesso explained how ICS is used at Google, and how it's the first step in the art of writing a postmortem.

Riccardo identified three key moments in resolving an incident:

- The incident is detected

- A human is alerted

- The incident is resolved

Between the incident being detected and a human being alerted lies your monitoring and alerting system. That system's effectiveness determines how quickly you close the time gap between moments 1 and 2.

Between a human being alerted and the incident being resolved lies your incident management process. That process's effectiveness determines how quickly you close the time gap between moments 2 and 3.
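
As a rough sketch of how these two gaps could be measured, the snippet below stores the three moments as timestamps and derives the gaps from them. The class name, property names, and timestamps are my own illustration, not something shown in the talk.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class IncidentTimes:
    """The three key moments of an incident."""

    detected_at: datetime
    alerted_at: datetime
    resolved_at: datetime

    @property
    def time_to_alert(self) -> timedelta:
        # Gap owned by the monitoring and alerting system.
        return self.alerted_at - self.detected_at

    @property
    def time_to_resolve(self) -> timedelta:
        # Gap owned by the incident management process.
        return self.resolved_at - self.alerted_at


# Made-up timestamps for illustration.
incident = IncidentTimes(
    detected_at=datetime(2025, 1, 1, 10, 0),
    alerted_at=datetime(2025, 1, 1, 10, 4),
    resolved_at=datetime(2025, 1, 1, 10, 49),
)
print(incident.time_to_alert, incident.time_to_resolve)
```

Tracking the two gaps separately tells you which system to improve: slow alerting points at monitoring, slow resolution points at the incident process.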

After the incident is resolved, your postmortem takes place.

Incidents should lead to postmortems and for that you need data. Riccardo mentioned the use of a living incident document anyone involved in the incident can contribute to. In it you keep track of everything anyone does, the reasoning, the result, and the time it happened.

But why do you really need a postmortem?

You need it to:

- Prevent the issue from recurring ad infinitum

- Reduce the likelihood of stressful outages

- Avoid multiplying complexity

- Learn from your mistakes and those of others

Riccardo often repeated the important role of culture in incident management. A company needs a culture that fosters blamelessness, as it in turn increases effectiveness. The way Google's culture addresses responsibility for an incident is by saying “you were close to the keyboard when the problem happened, you're the best person to understand it”. Notice how impersonal that kind of language is.

Transparency in sharing mistakes also enables the publication of postmortems, benefiting the largest possible audience with their lessons.

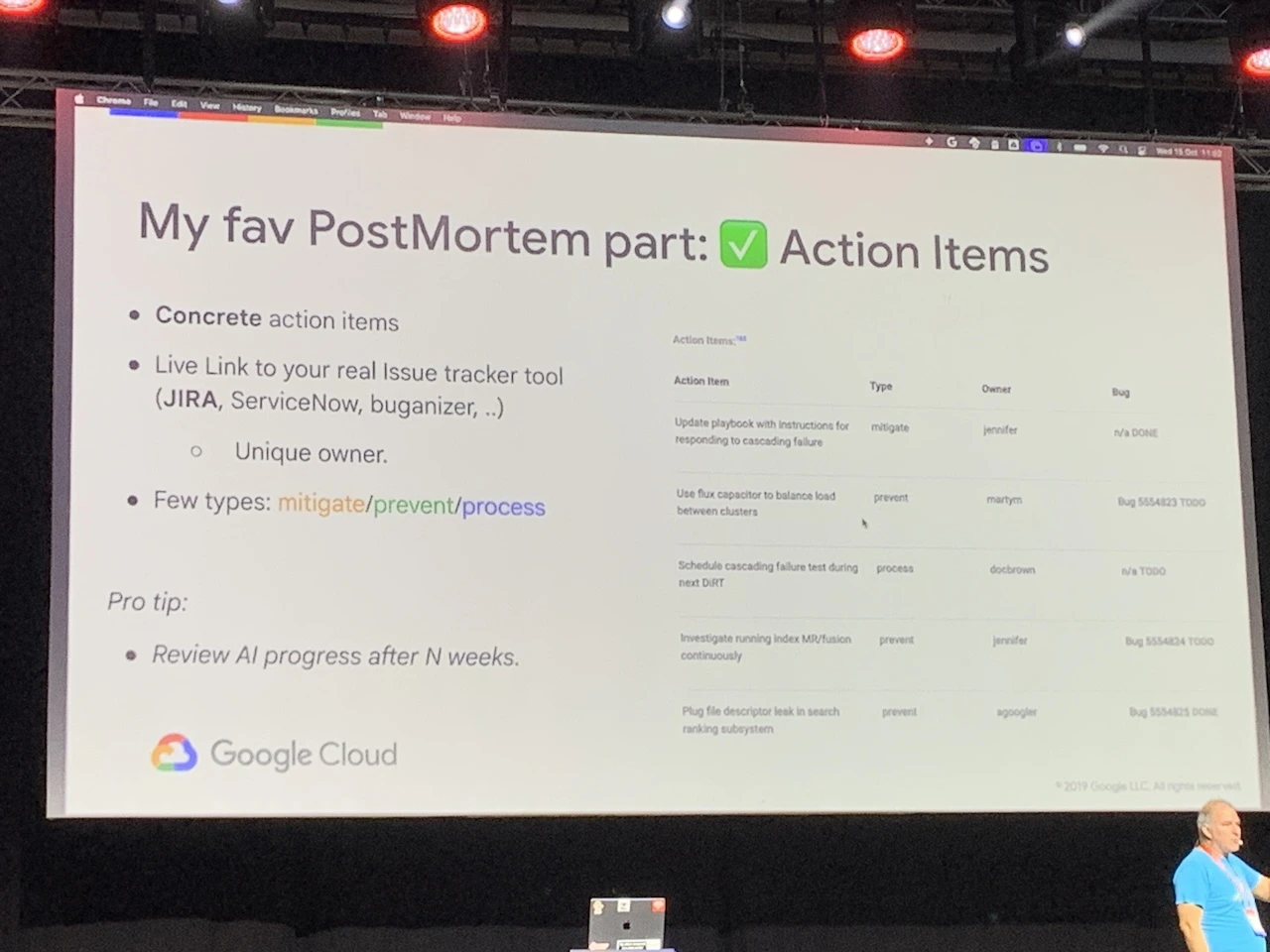

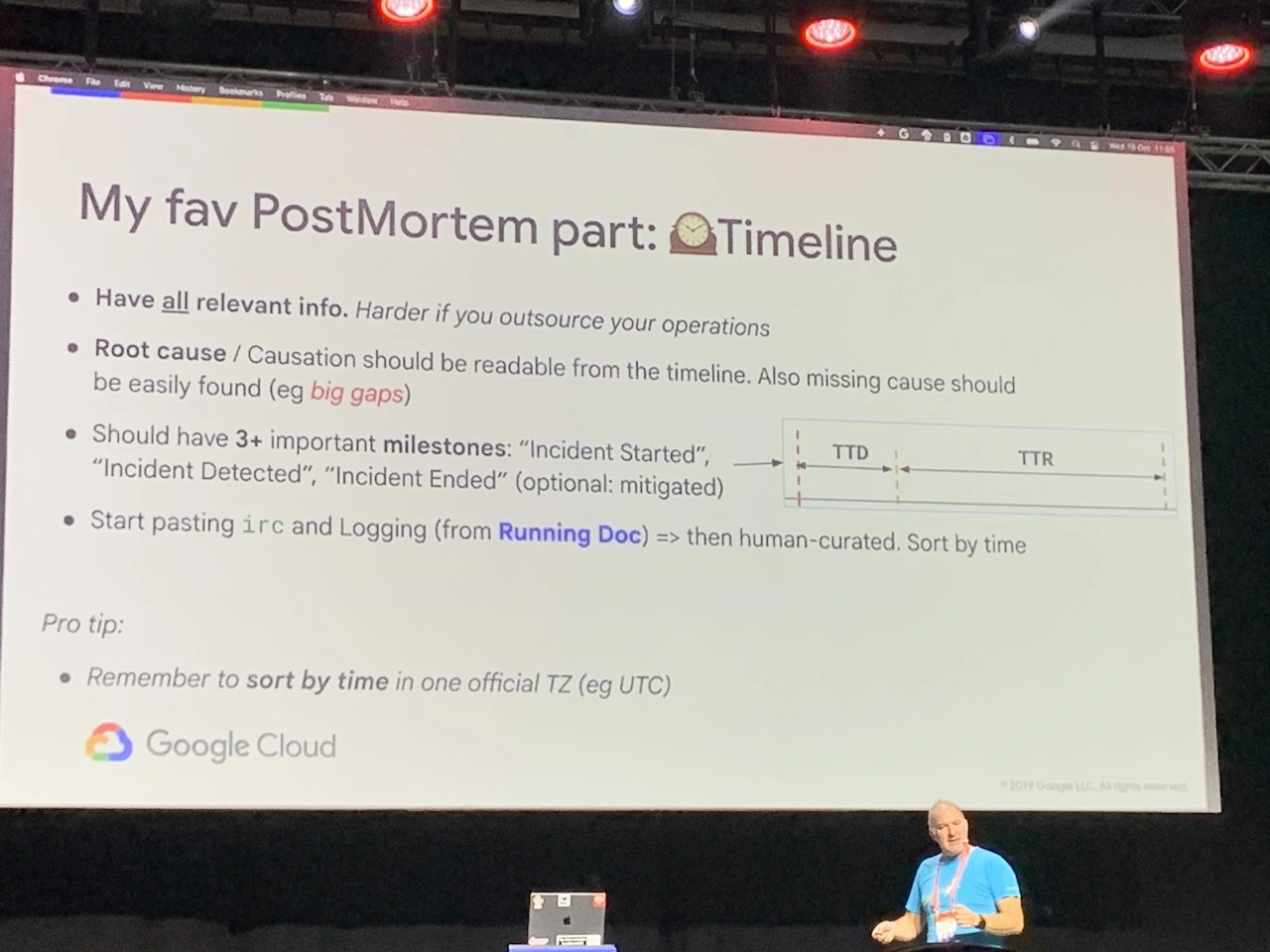

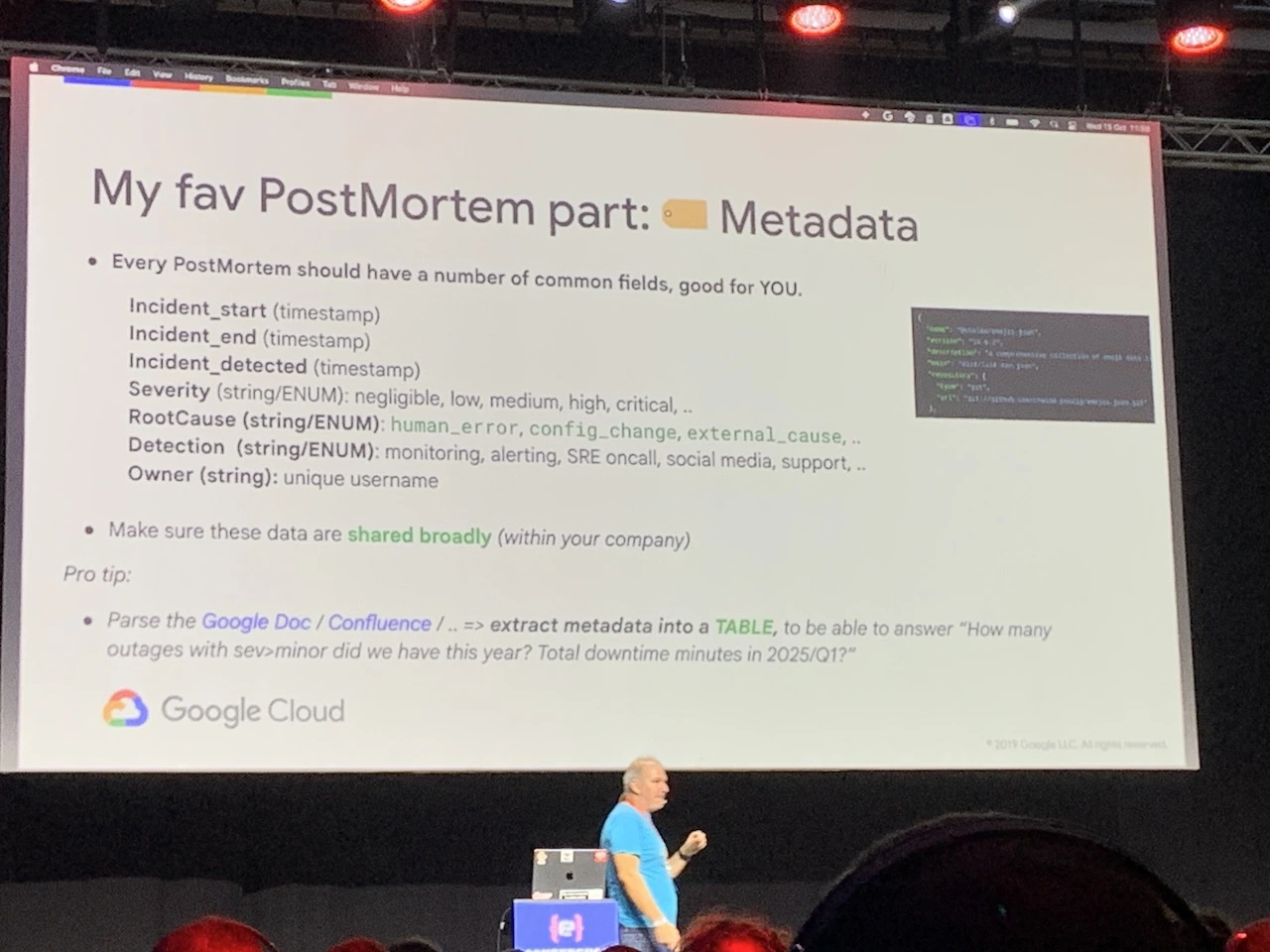

Riccardo highlighted the three core components of a postmortem: action items, timeline, and metadata.

Action items ensure a problem won't happen again.

Keeping a detailed timeline exposes any problem with cross-team dependencies.

Metadata enables useful insights. Riccardo mentioned that Google found that 63% of their incidents were due to config changes.
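
To make the three components concrete, here's a small sketch of how a postmortem could be modeled, and how metadata makes an insight like the share of incidents per cause easy to compute. The field names, the `root_cause` key, and the sample data are assumptions of mine, not Google's actual format.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class TimelineEntry:
    time: str    # when it happened, e.g. "10:04"
    who: str     # who acted
    action: str  # what they did and why
    result: str  # what came of it


@dataclass
class Postmortem:
    title: str
    timeline: list[TimelineEntry] = field(default_factory=list)
    action_items: list[str] = field(default_factory=list)
    metadata: dict[str, str] = field(default_factory=dict)


def share_by_cause(postmortems: list[Postmortem]) -> dict[str, float]:
    """Fraction of postmortems per root cause, read from the metadata."""
    counts = Counter(pm.metadata.get("root_cause", "unknown") for pm in postmortems)
    total = sum(counts.values())
    return {cause: n / total for cause, n in counts.items()}


# Made-up sample: three config changes and one code bug.
history = [
    Postmortem("Checkout outage", metadata={"root_cause": "config_change"}),
    Postmortem("Login errors", metadata={"root_cause": "config_change"}),
    Postmortem("Slow search", metadata={"root_cause": "code_bug"}),
    Postmortem("Broken emails", metadata={"root_cause": "config_change"}),
]
shares = share_by_cause(history)
print(shares)
```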

Riccardo shared an example postmortem demonstrating how to put these components into practice.

In the Q&A after the talk, someone asked how to handle stressful incidents. Riccardo replied that “if you're handling an incident, it usually means you have the training to handle it.” I found that answer insightful and reassuring.

Beyond Lambda: Serverless Pitfalls Nobody Warns You About

One of the last features I delivered at work was a 3D Secure payment flow to enable fraud prevention. During the implementation our team ran into a critical issue: the backend was taking too long to determine whether the flow had completed. The culprit was the AWS Lambda we were using and its cold-start time. I witnessed how the frontend, and the customer, can be impacted by backend architectural decisions. I wanted to learn more, so I attended Avishag Sahar's talk. Vishi listed some of the traps you may run into with AWS Lambda, along with possible mitigations.

Trap #0 - Time Limit

Vishi started with the time limit trap at number 0 because of how common it is. AWS Lambda has a max runtime of 15 minutes, after which it throws a timeout error.

Possible mitigations include:

- using an asynchronous distributed system

- relying more on EC2

- using AWS Batch
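
One way to picture the asynchronous approach is a handler that watches its own time budget and hands back whatever it couldn't finish. This is a sketch under assumptions: `get_remaining_time_in_millis()` is provided by the Lambda Python context object, while the event shape, `process`, `SAFETY_MARGIN_MS`, and `FakeContext` are hypothetical names I made up so the example runs locally.

```python
SAFETY_MARGIN_MS = 10_000  # assumed buffer to return cleanly before the timeout


def process(item: int) -> int:
    """Hypothetical unit of work; stands in for the real job."""
    return item * 2


def handler(event, context):
    """Process as many items as the time budget allows and hand back the rest."""
    pending = list(event["items"])
    done = []
    while pending and context.get_remaining_time_in_millis() > SAFETY_MARGIN_MS:
        done.append(process(pending.pop(0)))
    # The caller (a queue, a state machine...) would re-submit the remaining items.
    return {"done": done, "remaining": pending}


class FakeContext:
    """Local stand-in for the Lambda context, returning scripted time budgets."""

    def __init__(self, remaining_ms):
        self._remaining_ms = iter(remaining_ms)

    def get_remaining_time_in_millis(self):
        return next(self._remaining_ms)


# The third check reports only 5 seconds left, so the last item is handed back.
result = handler({"items": [1, 2, 3]}, FakeContext([60_000, 20_000, 5_000]))
print(result)
```

The point of the design is that no single invocation ever needs to outlive the limit: the work is split into pieces, and unfinished pieces go back to whatever is orchestrating them.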

Trap #1 - Cold Starts

Cold starts “refer to the initialization steps that occur when a function is invoked after a period of inactivity or during rapid scale-up”. This can introduce significant latency in rare cases, as I experienced first hand.

You can remediate cold starts by pinging your lambda with a cron job, though this solution is not hermetic.

Otherwise there's the provisionedConcurrency flag which keeps n lambdas warm and ready.

Use this option carefully as in this case you'll pay a higher cost for idle time, which defeats the purpose of serverless.
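
Here's a minimal sketch of the cron-ping idea. It relies on the fact that module-level state survives between invocations of the same warm execution environment. The `warmup` event key and the returned fields are hypothetical, chosen only for this example.

```python
# Module-level code runs once per execution environment, during the cold start.
# Warm invocations reuse the same module, so this counter keeps its value.
_invocation_count = 0


def handler(event, context=None):
    global _invocation_count
    _invocation_count += 1
    is_cold = _invocation_count == 1

    # Hypothetical marker sent by the scheduled ping: return early, do no real work.
    if event.get("warmup"):
        return {"warmed": True, "cold_start": is_cold}

    return {"result": "real work done", "cold_start": is_cold}


# Locally simulating the cron ping followed by a real request in the same environment.
ping = handler({"warmup": True})
real = handler({"order_id": 42})
print(ping, real)
```

Here the ping absorbs the cold start, so the real request that follows lands on a warm environment. It's not airtight because a burst of traffic can still spin up fresh environments that no ping has touched.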

In my case we solved this problem by migrating from AWS Lambda to AWS Fargate.

For more, see Understanding and Remediating Cold Starts: An AWS Lambda Perspective.

Trap #2 - Max Code Storage

Lambdas support a global max storage of 75 GB per region, a 4 KB env var limitation, and a 250 MB unzipped size.

Each Lambda deployment creates a new version. To save on storage, you can use the prune plugin, which keeps only the last n deployments in storage.

You may also leverage the zip and slim plugins.
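
The pruning rule itself is simple enough to sketch. The function below is my own illustration of "keep the last n versions", not the plugin's actual implementation, and the version numbers are made up.

```python
def versions_to_prune(versions: list[int], keep: int) -> list[int]:
    """Return the version numbers to delete, keeping the `keep` newest ones."""
    if keep < 0:
        raise ValueError("keep must be zero or positive")
    newest_first = sorted(versions, reverse=True)
    return sorted(newest_first[keep:])


# Five deployed versions, keeping the three most recent.
to_delete = versions_to_prune([1, 2, 3, 4, 5], keep=3)
print(to_delete)
```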

Trap #3 - Shared Dependencies

Be aware that Lambdas do not share dependencies by default, therefore if you have heavy dependencies you can run into more deployment-size related issues.

You can resolve this using Layers, see Managing Lambda dependencies with layers.

Given these traps, Vishi listed some cases where a serverless architecture makes sense:

- ❇️ Event-driven

- ❇️ Short-lived & stateless

- ❇️ Embarrassingly parallel

- ❇️ Operationally lean

- ❇️ Pay-for-use friendly

And other cases where we'd want to avoid serverless:

- 🚨 Long-running

- 🚨 Tight latency

- 🚨 Heavy runtime

- 🚨 Large deps

- 🚨 Stateful

Possibilities with Web Capabilities

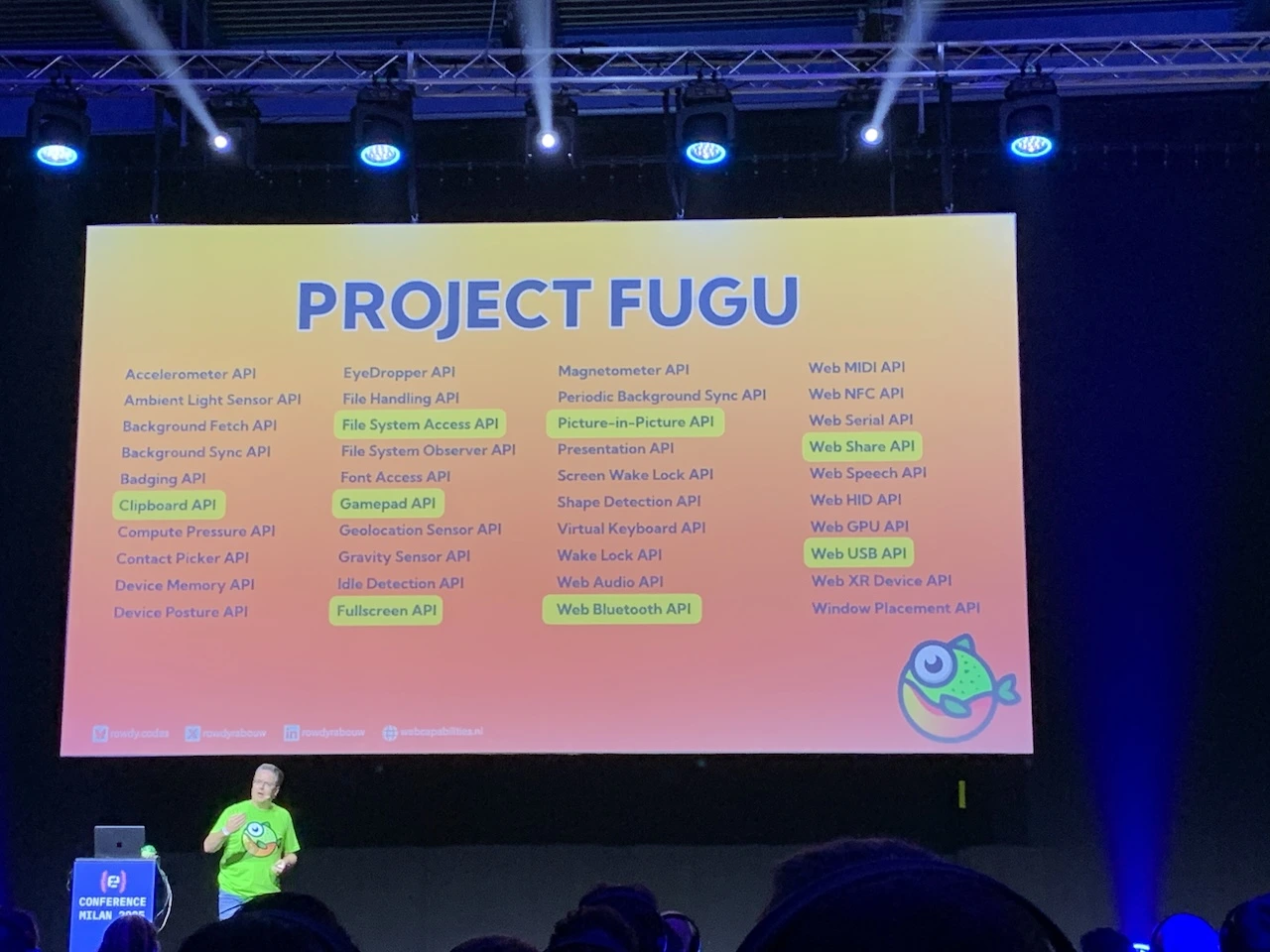

Why would a project pick the pufferfish 🐡 as its mascot?

This was the question I was asking myself at the start of Rowdy Rabouw's talk.

It turns out that the Web Capabilities project used to be referred to internally as project Fugu, which means pufferfish in Japanese. And in Japan, the pufferfish is a delicacy. But if prepared incorrectly, it can be highly toxic and cause death.

Analogously, web APIs coming out of project Fugu are powerful and desirable, but must be handled with great care to avoid harming users (through privacy or security risks).

These APIs aspire to close the gap between native capabilities and those available on the web.

Rowdy showcased these APIs:

- Clipboard API

- File System API

- Gamepad API

- Fullscreen API

- Picture-in-Picture API

- Web Bluetooth API

- Web Share API

- WebUSB API

Rowdy impressed us by controlling a drone with a PlayStation controller through his browser. He also made us all laugh when he connected a cheap printer to his browser and asked everyone to write the best tech joke they had, printing out all the jokes we were writing in real time!

Just for fun here's a demo of the Web Speech API:

Beyond the vibe: Why we need more SWEs, not less, in the age of AI

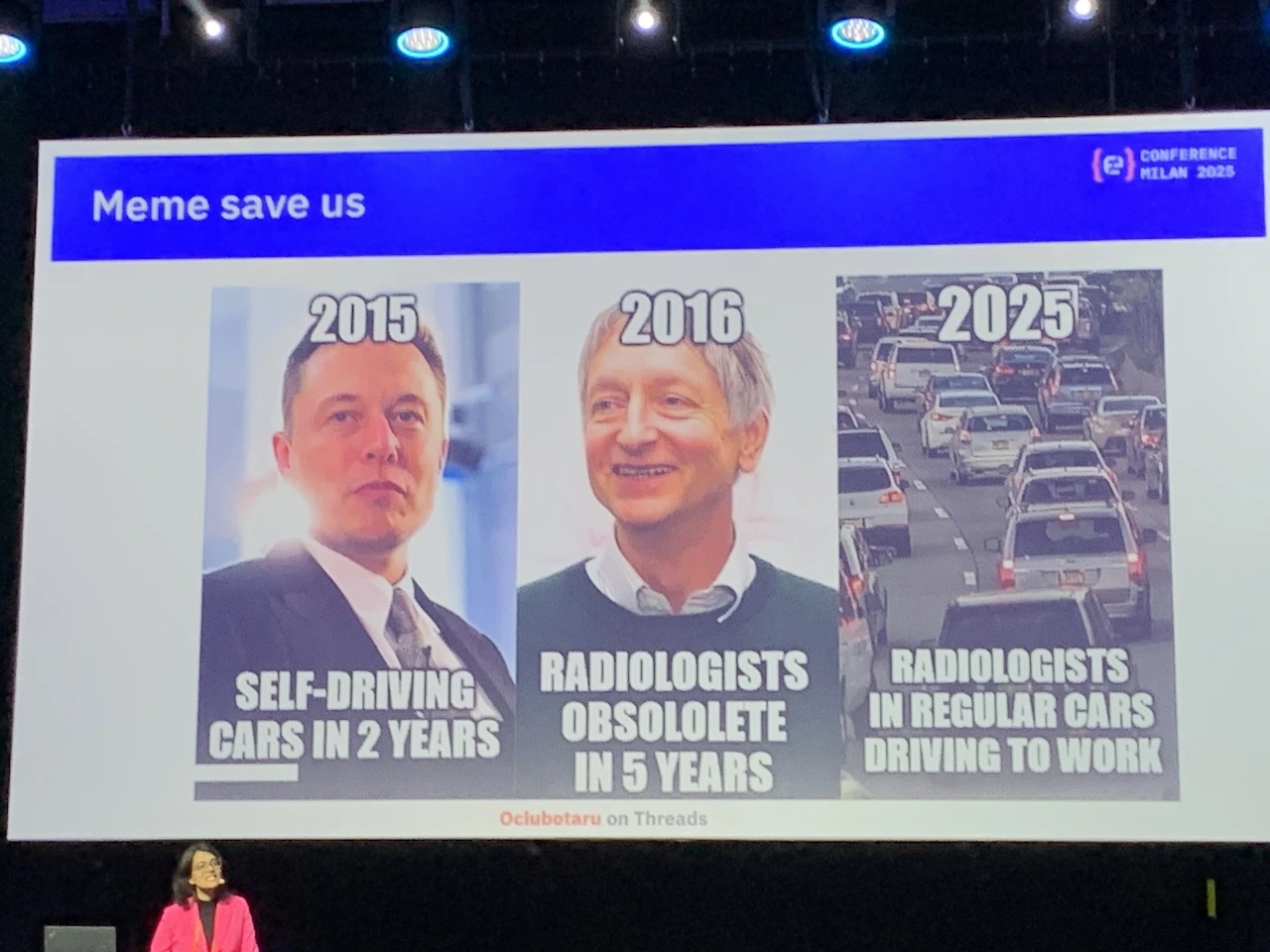

Shalini Kurapati's talk was a reality check on Generative AI. She began with a live poll asking if we thought AI would replace our jobs in 5 years. Over 70% of us responded “No” (me included), only 3% responded “Yes”, the rest were “Not sure”.

According to a June 2025 MIT report:

Despite $30-40 billion in enterprise investment into GenAI, …95% of organizations are getting zero return.

The problem is that AI is not magic; AI is just data + code + compute. It's heavily dependent on human data and human feedback.

The biggest challenge for AI is the data challenge: without data there's no AI, and most publicly available data has already been used (synthetic data only helps to a point). The data that has been used has also brought copyright problems and security risks.

Shalini questioned what productivity gains we actually got in exchange for these issues. Some gains turned out not to really be gains: favoring speed over quality led to AI slop and the acceleration of the dead internet theory.

Is a piece of work a productivity gain if it requires countless iterations, complete rewrites, or necessitates lowering the bar of what you consider good work? This is the case made in the Harvard Business Review article, AI-Generated “Workslop” Is Destroying Productivity.

Vibe coding can also be seen as the opposite of a gain. There are actual jobs for developers to clean up vibe code.

Shalini mentioned something I worry about: the risk of atrophy through delegating thinking, as explained in As AI gets smarter, are we getting dumber?

Consider that all real gains brought by AI so far have been accelerated by AI tools being cheap and heavily subsidized by VC funding. But this will not always be the case. ChatGPT has yet to make a profit, and the AI financial bubble is real. There are no profits in sight, but the immense infrastructure costs keep rising. All the GPUs will need replacement every 2 years, and massive amounts of freshwater are being consumed.

The problem is that it's hard to predict what will happen.

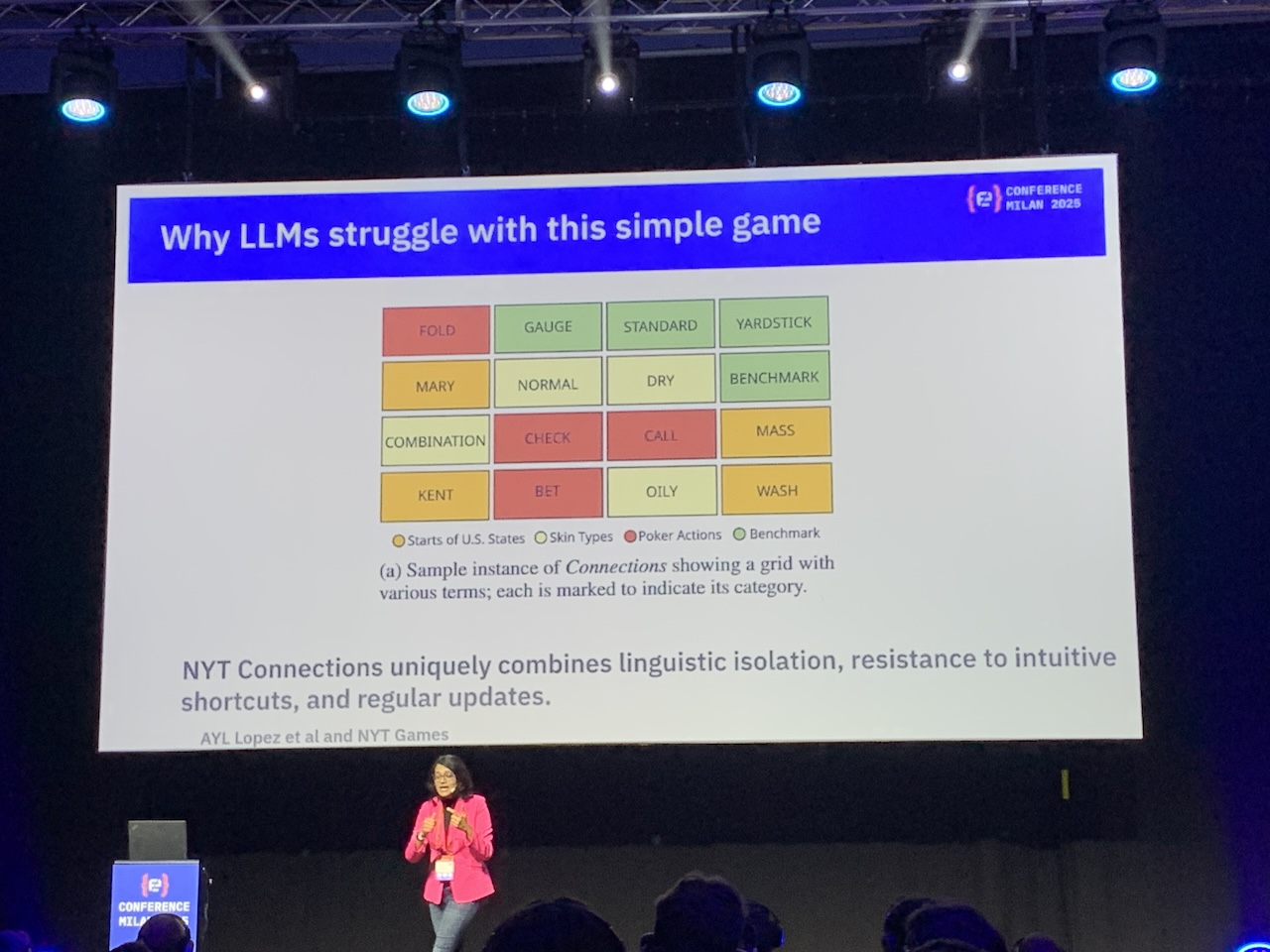

What is promising is that our jobs are here to stay for the foreseeable future. AI automates System 1 tasks (fast, pattern-based thinking) but it struggles with System 2 thinking, the slow, deliberate kind.

Abstraction, judgement, ethics, and teamwork all remain human. The limits of AI are exactly the gap that humans fill, and we software engineers do much more than code.

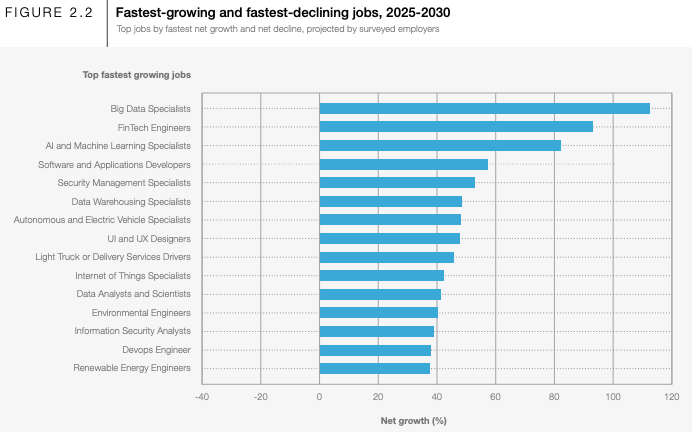

In fact, in the World Economic Forum's 2025 Future of Jobs Report, regular software engineers remain at the top of the list for fastest growing jobs in the next 5 years.

Despite its limits though, AI is here to stay.

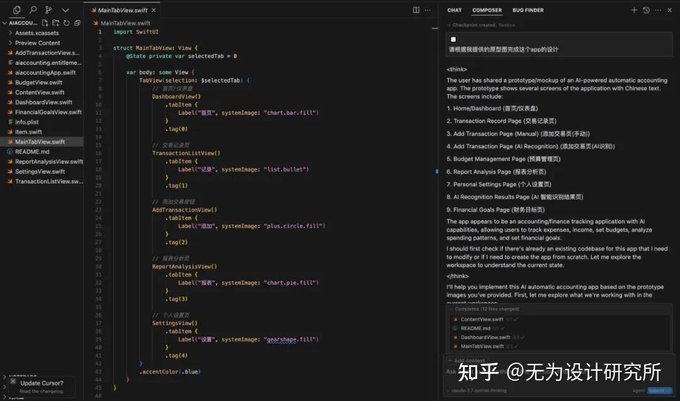

AI-ready Frontend Architecture

César Alberca showed us how to leverage AI for our needs. The focus of the talk was not AI, though. It was good architecture, and how it enables easier interactions with AI in our codebases.

César defined architecture as “self limitations”. Architecture favors intentional change while reducing unintentional change. And human-validated architecture leads to AI-augmented development.

Kent Beck's four rules of simple design pave the way to good architecture:

- Passes the tests

- Reveals intention

- No duplication (of concepts, not of code)

- Fewest elements

Good architecture works in layers. César divided layers into:

- Domain, everything that relates to business logic

- Application, "what can I do?" -> handle state, validation, permission or roles

- Infrastructure/Delivery, "how am I serving my application to the user?" -> example: React
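
Here's a tiny sketch of what that layering could look like in code. The shopping cart domain, the role check, and every name in it are my own illustration, not César's example, and the delivery layer is a plain function standing in for something like a React component.

```python
from dataclasses import dataclass


# Domain: business logic only, no framework and no I/O.
@dataclass(frozen=True)
class CartItem:
    name: str
    unit_price_cents: int
    quantity: int


def cart_total_cents(items: list[CartItem]) -> int:
    return sum(item.unit_price_cents * item.quantity for item in items)


# Application: "what can I do?" (validation, permissions, roles).
class CheckoutService:
    def __init__(self, allowed_roles: set[str]):
        self.allowed_roles = allowed_roles

    def checkout(self, role: str, items: list[CartItem]) -> int:
        if role not in self.allowed_roles:
            raise PermissionError(f"role {role!r} cannot check out")
        if not items:
            raise ValueError("cart is empty")
        return cart_total_cents(items)


# Infrastructure/Delivery: "how am I serving my application to the user?"
def render_total(total_cents: int) -> str:
    return f"Total: {total_cents / 100:.2f} EUR"


service = CheckoutService(allowed_roles={"customer"})
total = service.checkout("customer", [CartItem("book", 1250, 2)])
print(render_total(total))
```

Each layer only calls the one beneath it, so swapping the delivery layer leaves the domain and application code untouched.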

Humans and AI work best when there is a clean structure that they can replicate.

Decoding the Cosmos: Voyager's 1970s Algorithms Meet Today's Cutting-Edge Tech

Day 1 of Codemotion opened with a keynote on CERN, and for my last talk, Pieter van der Meer brought us back to exploring the cosmos with the Voyager program's mission through deep space.

The scale at which these projects operate blows my mind every time. The Voyager mission has been running for 48 years and counting, and we are still communicating with probes about 25 billion kilometers away, which amounts to a round-trip latency of roughly two days.
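
As a quick sanity check on that latency, here's the arithmetic, assuming the signal travels at the speed of light in vacuum and using the 25 billion kilometer figure from the talk. The function and variable names are mine.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum


def one_way_light_time_hours(distance_km: float) -> float:
    """Hours a radio signal needs to cover the given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 3600


one_way_hours = one_way_light_time_hours(25e9)
round_trip_days = 2 * one_way_hours / 24

print(f"one way: {one_way_hours:.1f} h, round trip: {round_trip_days:.2f} days")
```

A command takes a bit over 23 hours to reach the probe, so sending one and hearing back takes just under two days.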

The main topic of the talk was how communication is possible with the Voyager probes being so far away.

Pieter mentioned three key techniques for receiving data:

These algorithms, born in the era of drugs and rock and roll, are today complemented by advanced technology easing deep-space communication.

The gist of it is that the transmit power of each Voyager probe is far greater than that of modern smartphones (about 8 times as powerful), but more importantly, when the weakened signal arrives at Earth it is still detectable thanks to the advanced receiving technology of the NASA Deep Space Network.

Conclusion

As each Voyager probe keeps silently sailing through the deep darkness of space at 60,000 km/h, the Codemotion conference, as well as this two-part series of blog posts, comes to an end.

Given the quantity of information to digest, I wrote these articles to refer back to them and dig deeper into the many engaging topics discussed at the event.

Physically attending conferences is a rewarding experience and I recommend it to all software engineers. Besides, it's something AI can't do yet.