Discovering Application Containerization

Contents

1 Virtualization

1.1 Virtualization: A General Definition

1.2 Kernel Space vs User Space

1.3 Three Kinds of Virtualization

2 Virtual Machines and the Hypervisor

2.1 The Resource Optimization Hole that Containers Fill

3 Application Containerization

3.1 Multiple User Spaces that Share a Kernel

3.2 Container Runtime

3.3 Underlying Technology of Containers

4 Docker

4.1 Docker Images

4.1.1 What is a Docker Image?

4.1.2 Layers

4.1.3 The role of AUFS and OverlayFS

4.1.4 What’s the Point of Layers?

4.2 Back to Colossal Cave Adventure

5 References

I was watching High Score on Netflix, a documentary on video games, when I found out about Colossal Cave Adventure. I had never played a text-based adventure game before and I really wanted to try it out, so you can imagine how happy I was when I found out that a version of the game was open source. I cloned the repository and started installing the dependencies in order to build and play the game. However, I quickly ran into trouble with certain libraries…

I just wanted to try out the game, not worry about setting up my environment in order to build it first. But then! I made a fantastic discovery…

With one magical command I could skip all of this dependency nonsense and play the game right away:

    docker run --rm -it quay.io/rhdp/open-adventure ./advent

(The above command obviously only works if you already have Docker installed.) I’ll get into the different parts of this command later in this post.

The thing is, after witnessing this sorcery I then asked myself, what really is this Docker magic? And how did we get to this point in time where I could wield such unimaginable power? In the rest of this post you’ll find out what I discovered on my quest to unravel the witchcraft lurking behind my terminal’s command line.

Virtualization

Virtualization: A General Definition

Our story starts with virtualization, as it will be a fundamental concept in the following discussion. Virtualization is a general term and there are different kinds of virtualization. In the context of this article we can say that:

“Virtualization involves replicating an operating system on a physical machine in order to create a virtual environment in which an application may run.”

From the point of view of the hosted application, the virtual environment should be indistinguishable from a regular operating system environment on a regular computer.

Kernel Space vs User Space

Before looking at the different types of virtualization, we need to be clear on what the kernel of an operating system is:

“Fundamentally the kernel is a software program in an operating system, it is always running and is responsible for all core interactions between hardware and software.”

The kernel is so important that it runs in a part of memory called kernel space, which is completely isolated from the part of memory where the applications meant for the user are located (user space). In terms of Colossal Cave Adventure, the game resides in user space but when the game needs user input from the keyboard it will have to interact with the kernel via a system call.

Three Kinds of Virtualization

Virtualization was first developed in the 1960s by researchers at IBM. Today there are three main types of virtualization [1]:

- Full-virtualization: virtualizes an entire operating system kernel.

- Para-virtualization: virtualizes a kernel that has been optimized for performance.

- Operating-system-level virtualization: does not virtualize a kernel.

As you may have noticed, what happens to the kernel defines the type of virtualization.

Virtualization didn’t just appear out of nowhere: creating a virtual environment, as opposed to hosting an application directly on a physical machine, provides many advantages. In fact, virtualization is at the core of modern cloud infrastructure.

Full- and para-virtualization are the techniques used by virtual machines. You’ve probably already heard of virtual machine technologies like VirtualBox or VMware. On the other hand, operating-system-level virtualization is the technique used by containers (application containerization). Virtual machines and containers are the two most common ways to host applications in the cloud.

So now we’ve got a grasp on what virtualization is, and we know which two techniques are most popular in today’s cloud infrastructure. Next, in order to contextualize my bewilderment with Docker and understand containers better, it’ll help to look at how things were done in the pre-Docker era with virtual machines (para-virtualization).

Virtual Machines and the Hypervisor

A virtual machine (VM) is an abstraction of hardware [2]: each virtual machine simulates a physical machine capable of running applications.

Before containers, deploying applications on virtual machines was THE way to go. To deploy an application, you keep an image of a VM (basically a snapshot/copy of a fully configured operating system) ready to go, so that you don’t have to set up the VM with a script first. You spin up the VM, and BAM, you’ve got an environment ready to host your application.

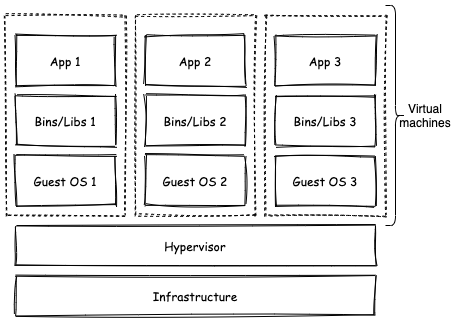

Virtual machines rely on a program known as a hypervisor which creates and runs virtual machines. A hypervisor sits between the host OS (the OS running on the physical machine) and the guest OS (the OS running in the VM):

The Resource Optimization Hole that Containers Fill

Each virtual machine in the above diagram replicates the kernel of an operating system. Do I really need an entire virtual machine just to run Colossal Cave Adventure?

As you can imagine, the overhead of creating a virtual machine just to host an application is pretty expensive, which is bad in terms of scalability. In fact, virtual machines don’t work well with microservices-based architectures. Are you really going to spin up an entire virtual machine just to run a lightweight modular part of your application? With virtual machines, applications tend to be monolithic. It was only with the rise of application containerization that the potential of microservices could be exploited more efficiently.

We’ve now got the background to understand where containers come into play.

Application Containerization

According to Docker’s website:

“A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another.”

Multiple User Spaces that Share a Kernel

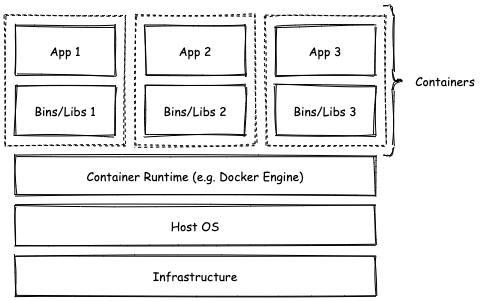

A container is an OS-level virtualization. As previously mentioned, this type of virtualization does not virtualize a kernel or emulate hardware in any way. The key difference between OS-level virtualization and the other kinds of virtualization is that although containers are isolated environments, containers share the kernel of the host OS. Since they share the kernel, containers have operating systems that do not have their own kernel.

The fact that the OS in a container does not need a kernel allows for extremely lightweight containers. In fact, a container can consist of a single root directory containing a single executable file, because not every container has an operating system inside.

Basically, with virtual machines, when we want to deploy an application we’ve got these big VM images. But one day someone was like “wait… what if we separate the kernel from the OS?!?” In this way, containers end up being operating systems with just a user space. So OS-level virtualization essentially allows you to run multiple user spaces that share the kernel of a host on a single machine.
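A quick way to convince yourself of this is to ask for the kernel release on the host and from inside a container. The sketch below is only an illustration: the function names are made up, the ubuntu image is just a convenient default, and it assumes a Linux host with Docker installed. (With Docker Desktop on macOS or Windows, containers run inside a Linux VM, so the container reports that VM’s kernel instead of the host’s.)

```shell
#!/bin/sh
# Hypothetical helpers, not part of Docker itself.

# Kernel release reported by the host.
host_kernel() {
  uname -r
}

# Kernel release reported from inside a throwaway container.
# The image defaults to ubuntu; any image that ships uname would do.
container_kernel() {
  docker run --rm "${1:-ubuntu}" uname -r
}

# Print both values and succeed only if they are identical.
compare_kernels() {
  host=$(host_kernel)
  guest=$(container_kernel "$@")
  printf 'host:      %s\ncontainer: %s\n' "$host" "$guest"
  [ "$host" = "$guest" ]
}
```

Calling `compare_kernels` on a Linux host should print the same release twice, since the container never boots a kernel of its own.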

Container Runtime

Just like virtual machines are managed by a hypervisor, containers are managed by a Container Runtime, which is a program that sits between the host operating system (OS) and the running containers:

In my case Colossal Cave Adventure is one of those apps enclosed in a container. As you can see, the game is already included with all the dependencies it needs to run. But in technical terms, what makes up a container? How are they possible?

Underlying Technology of Containers

While Docker was first released to the public in 2013, the underlying technology had been around for years. LXC (Linux Containers, an operating-system-level virtualization method) was initially released in 2008 and allowed running multiple isolated containers on a host using a single Linux kernel.

LXC built on top of two key Linux kernel features [3]:

- cgroups: a kernel feature that manages the resource usage of processes.

- Namespaces: a kernel feature that partitions resources so as to be able to assign a certain set of resources to a certain set of processes, such that one set of processes sees one set of resources while another set of processes sees a different set of resources.
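Both features are visible from any Linux shell, with or without containers, because every process already lives in a set of namespaces and a cgroup. The following is a minimal sketch, assuming a Linux system with /proc mounted; the function names are my own. Running it on the host and again inside a container should show different namespace identifiers, which is exactly the isolation described above.

```shell
#!/bin/sh
# Print each namespace the current process belongs to,
# one per line, in the form "pid -> pid:[<inode number>]".
# readlink runs as a child process, which inherits the same namespaces.
show_namespaces() {
  for ns in /proc/self/ns/*; do
    printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
  done
}

# Print the cgroup(s) the current process is assigned to.
show_cgroups() {
  if [ -r /proc/self/cgroup ]; then
    cat /proc/self/cgroup
  fi
}

# /proc/self/ns only exists on Linux, so guard the calls.
if [ -d /proc/self/ns ]; then
  show_namespaces
  show_cgroups
else
  echo "no /proc/self/ns here: this sketch needs a Linux kernel"
fi
```

Two processes that print the same identifier for a given namespace share that view of the system; two that print different identifiers are isolated from each other for that resource.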

With a general understanding of containers, we can now move on to talk about Docker specifically.

Docker

As previously mentioned, the technology behind containers isn’t new. In fact, Docker started out by using LXC: it built on top of LXC, added more functionality, and eventually dropped it. More importantly, Docker championed this technology and helped usher in a new era of development, known as cloud-native development, which moves away from the monolith and focuses on microservices.

Docker is more than a container runtime: it provides additional tooling and a unified API that make it easy to work with containers. We’ll see some of the useful things Docker can do for us next.

Docker Images

What is a Docker Image?

As mentioned before, a key aspect of container isolation is that each container has its own stripped-down operating system. This is provided to Docker containers via a Docker image, an immutable, read-only file [4] that includes everything needed to run an application [5].

An image is constructed from a base image, which is a snapshot of a certain operating system (minus the kernel).

Container images can be stored in an image registry, which is a place where you can store and retrieve images just like you do with source code and GitHub. You can for example create a container based off of Ubuntu (and open a bash shell once the container starts up) by running:

    docker run -it ubuntu bash

Note that this will work even if you don’t have the ubuntu image downloaded: Docker will download it for you if it doesn’t find it on your computer.

While an image is a snapshot of an application and its environment, a container is a running instance of such a snapshot. The big difference that comes with “running” is that the container can be changed (you can perform operations in it like adding or removing files/directories).

Layers

Images are made up of layers, where each layer corresponds to a change made to a certain base image via a set of possible docker commands.

For example, you can modify an image and commit the change (with docker commit), similarly to how source code is version-controlled with Git (you can even include a commit message and author name). Each “commit” is a change in the filesystem and corresponds to an image layer. Only the differences between filesystem snapshots are saved, just like only the differences between source code versions are saved in a regular commit. A stack of layers is basically a stack of changes to an initial filesystem.
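To make the Git analogy concrete, here is a rough sketch of that workflow. The container name (layer-demo), the new image name (ubuntu-hello), the author and the commit message are all placeholders I picked for illustration; the Docker subcommands and the `-m` and `-a` flags are the real ones.

```shell
#!/bin/sh
# commit_demo [BASE_IMAGE] [NEW_IMAGE]
# Changes a container's filesystem, commits it as a new image layer,
# and lists the layers of the resulting image.
commit_demo() {
  base=${1:-ubuntu}
  new_image=${2:-ubuntu-hello}   # hypothetical image name

  # Run a container whose only job is to create one file.
  docker run --name layer-demo "$base" touch /hello.txt

  # Save the container's filesystem change as a new layer on top of the base.
  docker commit -m "Add hello.txt" -a "Jane Doe" layer-demo "$new_image"

  # The commit should show up as the topmost entry, message included.
  docker history "$new_image"

  # The stopped container is no longer needed.
  docker rm layer-demo
}
```

After `commit_demo`, running `docker run --rm ubuntu-hello ls /hello.txt` should find the file, while a container started from plain ubuntu would not.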

Each of these layers cannot be changed, so how is it possible that if you run a container you actually can make changes in the container?

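The reason why it’s possible to make changes to the filesystem in a running container is that when you run an image, Docker adds a writable layer on top of all the read-only image layers of the Docker image. Note that this writable layer belongs to the container, not to the image: the changes you make are thrown away when the container is removed (which happens as soon as it stops if you used the --rm flag), and a new container created from the same image starts from a clean slate.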

So a Docker image is made up of layers, where each layer represents a change to the filesystem relative to the previous layer, starting from a base image. When you run a container you don’t have any clue that layers exist, so how are these layers combined to produce a final filesystem for your running container?

The role of AUFS and OverlayFS

Docker has historically used AUFS (Advanced Multi-Layered Unification Filesystem) as a union filesystem, which basically allows the overlaying of multiple filesystems. OverlayFS is a more recent alternative to AUFS, and it is what Docker’s overlay2 storage driver is built on.

These tools make image layers possible and most importantly produce the final filesystem for a container using the aforementioned layers.
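The core idea of a union filesystem can be mimicked with plain directories: look for a file in the topmost layer first and fall through to the layers below. This is a toy model under my own assumptions (the directory names base, app and rw are invented, and real union filesystems do this inside the kernel, with extras such as deletion markers), but it shows why the topmost layer “wins”.

```shell
#!/bin/sh
# resolve FILE TOP_LAYER ... BOTTOM_LAYER
# Prints the path of FILE in the first (topmost) layer that contains it.
resolve() {
  file=$1
  shift
  for layer in "$@"; do
    if [ -e "$layer/$file" ]; then
      printf '%s\n' "$layer/$file"
      return 0
    fi
  done
  return 1   # not present in any layer
}

# Three directories stand in for two read-only image layers
# and the container's writable layer.
demo=$(mktemp -d)
trap 'rm -rf "$demo"' EXIT
mkdir "$demo/base" "$demo/app" "$demo/rw"

echo "from base" > "$demo/base/os-release"
echo "from base" > "$demo/base/motd"
echo "from app" > "$demo/app/motd"          # overrides the base layer's motd
echo "from container" > "$demo/rw/notes"    # written while "running"

for f in os-release motd notes; do
  printf '%s: ' "$f"
  cat "$(resolve "$f" "$demo/rw" "$demo/app" "$demo/base")"
done
# os-release: from base
# motd: from app
# notes: from container
```

Nothing in base or app is ever modified here: every write goes to rw, which is why several containers can safely stack their own writable directory on top of the same read-only layers.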

What’s the Point of Layers?

Other than conveniently providing a way to track changes made to an image, this layering system allows you to run multiple containers based off of the same base image. Docker reuses the layers containers have in common so that it doesn’t need to recreate the base image for each running container.

Basically, say you have an existing container X that uses image A, which occupies 50 MB of disk space, and you want to create another container Y. You can reuse image A to run container Y instead of downloading some other image, saving space. When you run both container X and Y, both containers will have their own filesystem based off of all the image layers they have in common (obviously their final topmost write layers are not the same) [6].

Layers are also cached. All of this allows Docker to spin up containers in seconds (versus minutes for a virtual machine).
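If you’re curious which layers two of your local images actually have in common, you can compare their layer digests. The helper names below are hypothetical and the sketch assumes both images have already been pulled; it relies on the RootFS.Layers field that `docker image inspect` reports for an image.

```shell
#!/bin/sh
# layer_ids IMAGE
# Prints one layer digest per line for a local image.
layer_ids() {
  docker image inspect \
    --format '{{range .RootFS.Layers}}{{println .}}{{end}}' "$1" |
    sed '/^$/d'   # drop the blank line left after the last digest
}

# shared_layers IMAGE_A IMAGE_B
# Prints the digests that appear in both images.
shared_layers() {
  tmp_a=$(mktemp)
  tmp_b=$(mktemp)
  layer_ids "$1" | sort > "$tmp_a"
  layer_ids "$2" | sort > "$tmp_b"
  comm -12 "$tmp_a" "$tmp_b"   # keep only lines common to both files
  rm -f "$tmp_a" "$tmp_b"
}
```

For two images built from the same base, `shared_layers image-a image-b` should list the base image’s layers, which Docker stores only once on disk.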

One final note: while using images is clearly convenient, in reality you don’t need an image to run a container.

Back to Colossal Cave Adventure

We can finally get back to Colossal Cave Adventure and wrap everything up.

The docker command at the beginning of this article is the quickest way to start playing the game:

    docker run --rm -it quay.io/rhdp/open-adventure ./advent

What it does is:

- docker run starts a container.

- The --rm flag removes the container once it is stopped.

- The -it flags let us interact with the program running in the container (-i keeps standard input open, -t allocates a terminal).

- quay.io/rhdp/open-adventure is the image with which to create the container.

- ./advent is the command we want to execute in the container once it starts up.

Let’s break down some of these steps using some intermediate commands.

I can pull the relevant image from the Quay image registry with docker pull:

    docker pull quay.io/rhdp/open-adventure

I can check that I have the image downloaded:

    docker images

    REPOSITORY                    TAG      IMAGE ID       CREATED       SIZE
    quay.io/rhdp/open-adventure   latest   f9bd4da91330   2 years ago   1.13GB

I can create a container (named open-adventure) using the pulled image:

    docker create --name open-adventure quay.io/rhdp/open-adventure

    081eccd52f1569b0d7b0634b3bce5bc0264c57e417cee8f382ddb04e13d118b9

We can see that the container has been created with status Created:

    docker ps -a

    CONTAINER ID   IMAGE                         COMMAND                  CREATED          STATUS    PORTS   NAMES
    032b27f4f03b   quay.io/rhdp/open-adventure   "/home/user/entrypoi…"   12 seconds ago   Created           open-adventure

Now let’s start the container:

    docker start open-adventure

    open-adventure

We can see that the status has gone from Created to Up:

    docker ps

    CONTAINER ID   IMAGE                         COMMAND                  CREATED          STATUS         PORTS              NAMES
    081eccd52f15   quay.io/rhdp/open-adventure   "/home/user/entrypoi…"   22 seconds ago   Up 7 seconds   22/tcp, 4403/tcp   open-adventure

I can open an interactive bash shell in the container like so:

    docker exec -it open-adventure /bin/bash

I can confirm that the container is running a certain Linux distribution:

    cat /etc/*release*

    CentOS Linux release 7.5.1804 (Core)
    NAME="CentOS Linux"
    VERSION="7 (Core)"
    ID="centos"
    ID_LIKE="rhel fedora"
    VERSION_ID="7"
    PRETTY_NAME="CentOS Linux 7 (Core)"
    ANSI_COLOR="0;31"
    CPE_NAME="cpe:/o:centos:centos:7"
    HOME_URL="https://www.centos.org/"
    BUG_REPORT_URL="https://bugs.centos.org/"
    CENTOS_MANTISBT_PROJECT="CentOS-7"
    CENTOS_MANTISBT_PROJECT_VERSION="7"
    REDHAT_SUPPORT_PRODUCT="centos"
    REDHAT_SUPPORT_PRODUCT_VERSION="7"
    CentOS Linux release 7.5.1804 (Core)
    CentOS Linux release 7.5.1804 (Core)

In this case the container is based off of the CentOS Linux distribution.

Knowing that the game is an executable named advent in my current working directory, I can execute this command in the running container to play the game:

    ./advent

    Welcome to Adventure!! Would you like instructions?

    > yes

    Somewhere nearby is Colossal Cave, where others have found fortunes in
    treasure and gold, though it is rumored that some who enter are never
    seen again. Magic is said to work in the cave. I will be your eyes
    and hands. Direct me with commands of 1 or 2 words. I should warn
    you that I look at only the first five letters of each word, so you'll
    have to enter "northeast" as "ne" to distinguish it from "north".
    You can type "help" for some general hints. For information on how
    to end your adventure, scoring, etc., type "info".
    - - -
    This program was originally developed by Willie Crowther. Most of the
    features of the current program were added by Don Woods.

    You are standing at the end of a road before a small brick building.
    Around you is a forest. A small stream flows out of the building and
    down a gully.

    >

And now it’s time to play. :-)

References

- [1] Research paper: Virtualization and Containerization of Application Infrastructure: A Comparison

- [2] Web Article: What is containerization?

- [3] Web Article: Software Containers: Used More Frequently than Most Realize

- [4] Web Article: Docker Image Vs Container

- [5] Documentation: Docker: Orientation and Setup

- [6] Web Article: Digging into Docker Layers