Kubernetes Overview

Why this post? I’m learning k8s at work, that’s why.

Goal: summarize what I’ve learned so far about Kubernetes, specifically its various components and how they fit together.

Understanding containers is key to understanding Kubernetes. I’ve written a blog post that could serve as an introduction to containers.

Disclaimer: I’m new to k8s so I’m not entirely sure what I’m talking about. Read at your own peril.

What is Kubernetes?

At its core k8s is a tool to help manage containerized applications. From the k8s docs:

“Kubernetes is an open source container orchestration engine for automating deployment, scaling, and management of containerized applications.”

Kubernetes is often abbreviated as k8s (8 for the eight letters between the “k” and the “s”) and I’ll be using k8s often.

The K8s Cluster

Kubernetes runs containerized applications on Nodes, which can be physical or virtual machines. These Nodes are grouped together in a cluster.

A cluster is made up of two types of Nodes: a Master node and multiple Worker nodes.

Note that “master” is a legacy term, as the official k8s documentation points out. This node provides an environment for the Control Plane to run in (I’ll get to what exactly that is later). Recently there’s been a push to replace “master node” with “control plane node” for inclusivity reasons, so I’ll be doing just that in the rest of this post.

As you may have already guessed by their respective names:

- The control plane node controls the cluster.

- The worker nodes run the containerized applications.

Now let’s see each one of these in more detail.

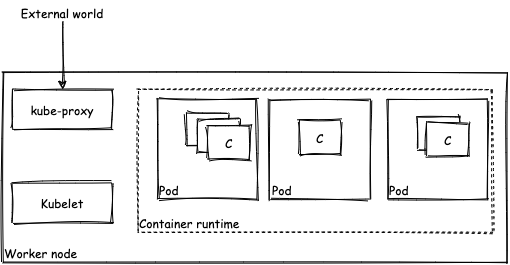

The Worker Node

The Worker Node is where your containerized applications will run. These Nodes host Pods which contain one or more containers in which your application runs.

A Pod is a Kubernetes Object (which we will define later) and the unit of deployment in Kubernetes. That is to say, if you want to deploy an application instance you will, at some point, need to define a Pod object that encapsulates the application and deploy it in the cluster.

As we’ll see with the Control Plane Node, the Worker Node has several components to it, each with a distinct role in servicing the Worker Node’s Pods.

These components are:

- The Container Runtime.

- The Kubelet.

- The kube-proxy.

The Container Runtime

Perhaps the easiest component to understand. The containers that run in Pods and the Pods themselves all run in the Container Runtime.

While Kubernetes is a container orchestration system, it doesn’t actually directly handle containers. K8s talks to the Container Runtime which itself implements and manages containers.

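You’ve probably heard a lot about Docker, but there are other container runtimes, such as containerd and CRI-O. Note that Kubernetes removed its built-in Docker Engine integration (dockershim) in version 1.24, so Docker is no longer what a cluster uses by default. Images built with Docker still run fine on the other runtimes.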

Kubernetes defines an interface called the Container Runtime Interface (CRI), which specifies how the Kubelet talks to a Container Runtime. Any runtime that implements the CRI, such as containerd or CRI-O, can be plugged into a cluster (Docker Engine needs the cri-dockerd adapter to do so). Pod to Pod networking, described by the Kubernetes Networking Model, is a separate concern that is handled by network plugins rather than by the CRI.
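
To make the idea of a pluggable runtime more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `ContainerRuntime` class, its two methods, `FakeRuntime` and `run_pod` are hypothetical names, and the real CRI is a gRPC API rather than a Python class. The point is only that the caller depends on the interface and not on a particular vendor.

```python
from abc import ABC, abstractmethod


class ContainerRuntime(ABC):
    """Hypothetical stand-in for the CRI: the only surface the caller sees."""

    @abstractmethod
    def pull_image(self, image: str) -> None:
        """Make the image available locally."""

    @abstractmethod
    def start_container(self, name: str, image: str) -> str:
        """Start a container and return its ID."""


class FakeRuntime(ContainerRuntime):
    """Toy runtime that only records what it was asked to do."""

    def __init__(self, scheme: str) -> None:
        self.scheme = scheme
        self.images = set()
        self.running = {}

    def pull_image(self, image: str) -> None:
        self.images.add(image)

    def start_container(self, name: str, image: str) -> str:
        if image not in self.images:
            raise RuntimeError(f"image {image!r} has not been pulled")
        container_id = f"{self.scheme}://{name}"
        self.running[container_id] = image
        return container_id


def run_pod(runtime: ContainerRuntime, containers: list) -> list:
    """Kubelet-like caller: starts every container through the interface only."""
    started = []
    for container in containers:
        runtime.pull_image(container["image"])
        started.append(runtime.start_container(container["name"], container["image"]))
    return started


pod_containers = [{"name": "nginx", "image": "nginx:1.14.2"}]
for runtime in (FakeRuntime("containerd"), FakeRuntime("cri-o")):
    print(run_pod(runtime, pod_containers))
```

Swapping one runtime for another changes nothing in `run_pod`, which is the property the CRI is meant to give Kubernetes.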

The Kubelet

The Kubelet is the most basic component of Kubernetes. This is because when you define a Pod that you want to run on a Node, it is the responsibility of the Kubelet on that Node to take your definition for what you want to run and make sure that it is running.

Kamal Marhubi wrote a great post that dives into the technicalities of the Kubelet; check it out. Be warned that some of the links are dead and some of the flags used in the commands are outdated (though the correct commands and links are easy to find).

The kube-proxy

Simply put, kube-proxy is a networking agent that runs on each Node and handles communication between the Node’s Pods and the rest of the cluster, as well as the outside world. This component isn’t essential to understand in the context of this post, though in practice it is extremely important: when you deploy an application, you’ll probably want users to be able to communicate with it.

And that’s it for the basics of a Worker Node.

The Control Plane Node

The control plane node is a machine that is responsible for managing the entire cluster; you can think of it as the brain of the cluster.

The most important feature of the control plane node is that it provides an environment for the Control Plane to run in. The control plane has several distinct components, each an agent with a specific role in the management of the cluster.

The control plane components are:

- The API Server.

- The Scheduler.

- etcd.

- kube-controller-manager.

Let’s quickly look at what each of these components does.

The API Server

The API Server exposes the Kubernetes API, which allows you to interact with the cluster. Any command you want to execute in the cluster goes through the API Server; it is the first point of contact for any such request.

Most importantly, the API Server is the only component that is allowed to communicate with etcd; any other component wanting to read or write cluster state must do so through the API Server. While processing a request, the API Server reads the current state of the cluster from etcd and, once the request has been executed, saves the resulting state back to etcd.
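
The gatekeeper role can be pictured with a small Python sketch. It is a toy under stated assumptions: `ToyApiServer` and its methods are hypothetical names, and a plain dictionary stands in for etcd. The real API Server also handles authentication, validation, and much more.

```python
import copy
from typing import Optional


class ToyApiServer:
    """Hypothetical gatekeeper: the only code that touches the store."""

    def __init__(self) -> None:
        self._store = {}  # stand-in for etcd, private on purpose

    @staticmethod
    def _key(kind: str, name: str) -> str:
        return f"{kind}/{name}"

    def create(self, obj: dict) -> dict:
        key = self._key(obj["kind"], obj["metadata"]["name"])
        # Read the current state before acting on the request.
        if key in self._store:
            raise ValueError(f"{key} already exists")
        # Persist the resulting state once the request has been handled.
        self._store[key] = copy.deepcopy(obj)
        return copy.deepcopy(obj)

    def get(self, kind: str, name: str) -> Optional[dict]:
        obj = self._store.get(self._key(kind, name))
        return copy.deepcopy(obj) if obj is not None else None


api = ToyApiServer()
api.create({"kind": "Pod", "metadata": {"name": "web"}, "spec": {}})
print(api.get("Pod", "web")["metadata"]["name"])
print(api.get("Pod", "missing"))
```

Other components in this sketch would hold a reference to `api`, never to the store itself.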

The Scheduler

As the name implies this component is responsible for scheduling. From the Kubernetes documentation:

“In Kubernetes, scheduling refers to making sure that Pods are matched to Nodes so that Kubelet can run them.”

The Scheduler watches for newly created Pods that have no Node assigned and then finds the best Node for each of those Pods to run on.

The heavy lifting it does is in the decision-making involved in picking the best Node for a Pod, which is performed according to certain scheduling principles (which you can customize). This scheduling is performed by comparing properties of the Pod with properties of each of the available Nodes.

The default scheduler for Kubernetes is called kube-scheduler. You can read more about node selection in the Kubernetes documentation.
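
The comparison between a Pod’s properties and each Node’s properties can be sketched as a filter step followed by a scoring step. This is a simplified illustration, not the kube-scheduler algorithm: the `pick_node` function, the dictionary keys, and the scoring rule (prefer the Node with the most CPU left over) are all assumptions made for the example.

```python
from typing import Optional


def pick_node(pod: dict, nodes: list) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Filtering: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        node for node in nodes
        if node["free_cpu"] >= pod["cpu"] and node["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None  # the pod stays unscheduled for now

    # Scoring (toy rule): prefer the node with the most CPU left afterwards.
    def score(node: dict) -> int:
        return node["free_cpu"] - pod["cpu"]

    return max(feasible, key=score)["name"]


# CPU in millicores, memory in MiB (units chosen for the example).
nodes = [
    {"name": "node-a", "free_cpu": 500, "free_mem": 1024},
    {"name": "node-b", "free_cpu": 2000, "free_mem": 4096},
    {"name": "node-c", "free_cpu": 4000, "free_mem": 128},
]
pod = {"cpu": 1000, "mem": 512}

print(pick_node(pod, nodes))  # node-b: the only node with enough CPU and memory
```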

etcd

Probably the most important component in the entire cluster. This is the real brain of the Control Plane.

etcd is a distributed key-value store. Data is not overwritten in place: each change is appended as a new revision, and obsolete revisions are cleaned up by periodic compaction. It stores the state of the entire cluster, including all Kubernetes Objects.

Pods are by nature ephemeral. etcd is how the state of any Object is persisted in the cluster. E.g. if a Pod created by a Deployment fails, the cluster doesn’t just forget your definition for it. The definition is persisted in etcd so that a new Pod can be spun up that is identical to the one that failed.
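
The append-style behaviour of the store can be pictured with a toy versioned key-value store. This is only a sketch: `ToyRevisionStore` and its methods are hypothetical names, the keys are made up, and real etcd additionally compacts old revisions and replicates data across several members.

```python
from typing import Optional


class ToyRevisionStore:
    """Hypothetical append-only store: every write becomes a new revision."""

    def __init__(self) -> None:
        self._log = []  # list of (revision, key, value), never rewritten
        self.revision = 0

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self._log.append((self.revision, key, value))
        return self.revision

    def get(self, key: str, at_revision: Optional[int] = None) -> Optional[str]:
        """Return the value of key as of at_revision (latest by default)."""
        limit = self.revision if at_revision is None else at_revision
        for revision, logged_key, value in reversed(self._log):
            if logged_key == key and revision <= limit:
                return value
        return None


store = ToyRevisionStore()
store.put("/pods/web", "Pending")
store.put("/pods/web", "Running")

print(store.get("/pods/web"))                 # Running
print(store.get("/pods/web", at_revision=1))  # Pending
```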

kube-controller-manager

Most objects in Kubernetes have two important properties: spec and status. The spec is defined by you when you write a configuration for an Object you want to run in the cluster, and it represents the desired state. The status is attached to the Object by Kubernetes and, as the name implies, keeps track of the state of the Object at the current point in time.

The role of the kube-controller-manager is simply to compare the desired state with the current state of an Object and to work to make the current state match the spec.

The kube-controller-manager runs controller processes; each controller watches at least one Kubernetes resource type (e.g. Pods, Nodes, Endpoints).
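
The comparison of desired and current state can be sketched as a single reconciliation step. This is a minimal illustration, not how any real controller is written: the `reconcile` function and its action strings are hypothetical, and the observed side is modelled on the `status` field that the Kubernetes API uses for an object’s current state.

```python
def reconcile(spec: dict, status: dict) -> list:
    """Compare desired and observed replica counts; return the actions needed."""
    diff = spec["replicas"] - status["readyReplicas"]
    if diff > 0:
        return ["create pod"] * diff
    if diff < 0:
        return ["delete pod"] * -diff
    return []  # observed state already matches the desired state


spec = {"replicas": 2}
status = {"readyReplicas": 2}
print(reconcile(spec, status))  # []

status["readyReplicas"] -= 1    # one pod fails
print(reconcile(spec, status))  # ['create pod']
```

A real controller runs this kind of comparison continuously, reacting to changes it observes through the API Server.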

The Kubernetes Object

I wanted to first introduce the various components that make up a Kubernetes cluster before defining a Kubernetes Object simply because by nature a k8s Object interacts with different important parts of the overall cluster.

Kubernetes Objects are how you define the desired state for a cluster; Objects also represent the current state of the cluster.

As mentioned before, the most important aspect of a k8s Object is the fact that it has a spec and a status (its desired state and its current state). The Object is defined by a configuration file, which is where you declare the spec. The Object, including its current state, is persisted in etcd, and the kube-controller-manager is responsible for bringing that current state in line with the spec.

A Pod is a kind of Object, and there are multiple kinds of Object in k8s, such as Pod, Deployment, ReplicaSet, Namespace… In fact, kind is one of the required fields when defining a configuration for an Object. The required fields when configuring an Object are:

- apiVersion

- kind

- metadata

- spec
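
As a quick illustration, the required fields can be checked with a few lines of Python. This is a sketch only: `missing_fields` is a hypothetical helper, the manifests are plain dictionaries, and the real validation is done by the API Server against each kind’s schema.

```python
REQUIRED_FIELDS = ("apiVersion", "kind", "metadata", "spec")


def missing_fields(manifest: dict) -> list:
    """Return the required top-level fields that the manifest lacks."""
    return [field for field in REQUIRED_FIELDS if field not in manifest]


complete = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {"containers": [{"name": "nginx", "image": "nginx:1.14.2"}]},
}
incomplete = {"kind": "Pod", "metadata": {"name": "web"}}

print(missing_fields(complete))    # []
print(missing_fields(incomplete))  # ['apiVersion', 'spec']
```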

Example configuration file:

    apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 2 # tells deployment to run 2 pods matching the template
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

The above configuration file specifies two instances of an app (an nginx server) to be deployed in the cluster. Once you apply it (for example with kubectl apply -f), the Controller Manager creates the necessary Pods and the Scheduler assigns each of them to an available Node. If any of the Pods fail, the Controller Manager will see the mismatch between the replica count declared in the configuration file and the number of Pods actually running, as recorded in etcd. It will then create a replacement Pod so as to respect the desired number of replicas declared in the Deployment’s configuration file.